A Very Deep Dive into Tesla - Part 2 Tesla Full Self Driving and Autonomous Driving

Is Tesla the next most valuable company in the making? Or is Tesla the biggest bubble ever?

DISCLOSURE: This is not investment advice and is for entertainment purposes only! I do hold a position in Tesla stock.

Tesla Full Self Driving – Future Robo Taxi?

Tesla’s Full Self-Driving (FSD) is arguably the most exciting yet controversial part of Tesla. Tesla aims to solve the autonomous driving problem and provide a Robotaxi (a taxi without a human driver) network. This network will allow Tesla and all Tesla owners to earn revenue from their cars as driverless taxis when they are not driving them. If Tesla can solve the autonomous driving problem, it will be able to generate substantial revenue, because it will be able to undercut all taxi and ride-hailing providers: the human driver is the biggest cost component of a ride-hailing or taxi service.

Market Size

According to Ark Invest, the ride-hailing market today generates roughly $150 billion in revenue globally. The autonomous ride-hailing market, however, could expand the total market from $150 billion to $6-7 trillion in revenue in 2030. You may wonder how a 40x increase in the ride-hailing market is possible. It is possible because, without a human driver, a robotaxi may only need to charge 50 cents per mile, compared with the $2-$3 per mile average cost of ride sharing today. At such a low price point, people may start to take a ‘robotaxi’ every day to work, to school and to buy groceries. People may even take a robotaxi instead of public transport. Ultimately, if the cost is low enough, people may not buy a car at all and just take a robotaxi every day. All this may sound impossible to you, but I invite you to read the following sections and draw your own conclusion, as this is critical for Tesla’s future. Let’s now get into the technology behind Tesla’s Full Self Driving and see how Tesla is tackling this challenge.

Tesla’s prediction of the average cost to run a robotaxi
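To make the market-size arithmetic above concrete, here is a small Python sketch. It only uses the figures quoted in the text ($150 billion today, $6-7 trillion projected, 50 cents versus $2-$3 per mile); the function names and the 10-mile commute are my own illustrative choices, not anything from Ark Invest or Tesla.

```python
# Figures quoted in the text (attributed there to Ark Invest).
CURRENT_MARKET_USD = 150e9      # ride-hailing revenue today
PROJECTED_LOW_USD = 6e12        # low end of the 2030 projection
PROJECTED_HIGH_USD = 7e12       # high end of the 2030 projection


def growth_multiple(projected_usd, current_usd):
    """How many times larger the projected market is than today's market."""
    return projected_usd / current_usd


def price_ratio(human_price_per_mile, robotaxi_price_per_mile):
    """How many times cheaper a robotaxi mile is than a human-driven mile."""
    return human_price_per_mile / robotaxi_price_per_mile


low = growth_multiple(PROJECTED_LOW_USD, CURRENT_MARKET_USD)
high = growth_multiple(PROJECTED_HIGH_USD, CURRENT_MARKET_USD)
print(f"Projected market growth: {low:.0f}x to {high:.0f}x")

# Hypothetical 10-mile commute, priced per mile.
commute_miles = 10
print(f"Robotaxi fare: ${0.50 * commute_miles:.2f}")
print(f"Ride-hailing fare: ${2.00 * commute_miles:.2f} to ${3.00 * commute_miles:.2f}")
```

The 40x figure corresponds to the low end of the projection; the high end works out to a little under 47x. The per-mile price gap is 4x to 6x, which is the lever the whole argument rests on.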

Vision Approach vs Lidar Approach

There are two main approaches to the autonomous driving problem: the Lidar approach and the vision approach. Tesla uses the vision approach, whereas all the other existing players in this field use the Lidar approach. The vision approach uses cameras as the ‘eyes’ of the car. In contrast, the Lidar approach uses Lidar, on top of cameras and radar, as the eyes of the car.

The main benefits of having a Lidar are better depth detection and localization. Lidar shoots out lasers and creates a point cloud map that measures the distance between the car and its surroundings (illustrated below). The Lidar point cloud map supplements camera vision, allowing the car to better judge the distance between itself and the objects in its vicinity. Most companies going with the Lidar approach have also chosen to use HD maps to supplement their cars’ ‘eyes’. HD maps can be accurate to within a few centimeters. With localized HD maps, Lidar and the maps work hand in hand, allowing the car to know exactly where it is and the exact path ahead. This is effective in preventing the car from hitting any stationary objects that are pre-mapped into the HD map.

Lidar point cloud compression – very accurate

You may ask by now: if Lidar provides two ‘great benefits’, why does Tesla not use it? There are two main reasons. Firstly, cost. The Lidar used on cars is very expensive. Although Waymo, one of the main Lidar players, has cut its Lidar costs by 90%, a unit still costs around $7,500 per car. As an add-on to an average car, this is pricey. HD maps are also expensive. An HD map has a unit price at least five times higher than a traditional navigation map. Furthermore, HD maps require frequent updates to reflect the most current road conditions, and the process of building an HD map is slow. These cost factors make it difficult to scale Lidar-based autonomous vehicles (at least in the near term, while the prices of Lidar and HD maps remain high).

Secondly, Tesla and Elon believe that it is simply not necessary to use Lidar (lasers) to drive. Humans drive with vision, and humans do not shoot out lasers to detect range. So why do cars need Lidar? Moreover, the current road system is built for drivers with vision, not lasers. Therefore, if the vision system is good enough, why bother with Lidar? Another interesting fact is that Elon actually knows Lidar quite well. At Elon’s other company, SpaceX, Elon and his team even developed their own Lidar and deployed it on their rockets. This signals that his belief that Lidar is unnecessary is an active decision based on extensive knowledge of the technology.

With its vision approach, Tesla believes that in the coming years its vision system will be good enough that other car manufacturers cannot justify the cost of putting a Lidar on a car. A counterargument is that having Lidar on top of cameras (only for depth detection) will still be safer. However, the question is, once autonomous driving achieves a certain level of safety, for example 1 accident per 10 billion miles, will people pay more for a Lidar that achieves 1 accident per 20 billion miles? History has shown that people will not pay more for a ‘safer’ airline ticket compared with a normal one, because the normal one is already ‘safe enough’. This means that if Tesla’s vision approach is safe enough, Lidar would only add redundant cost.

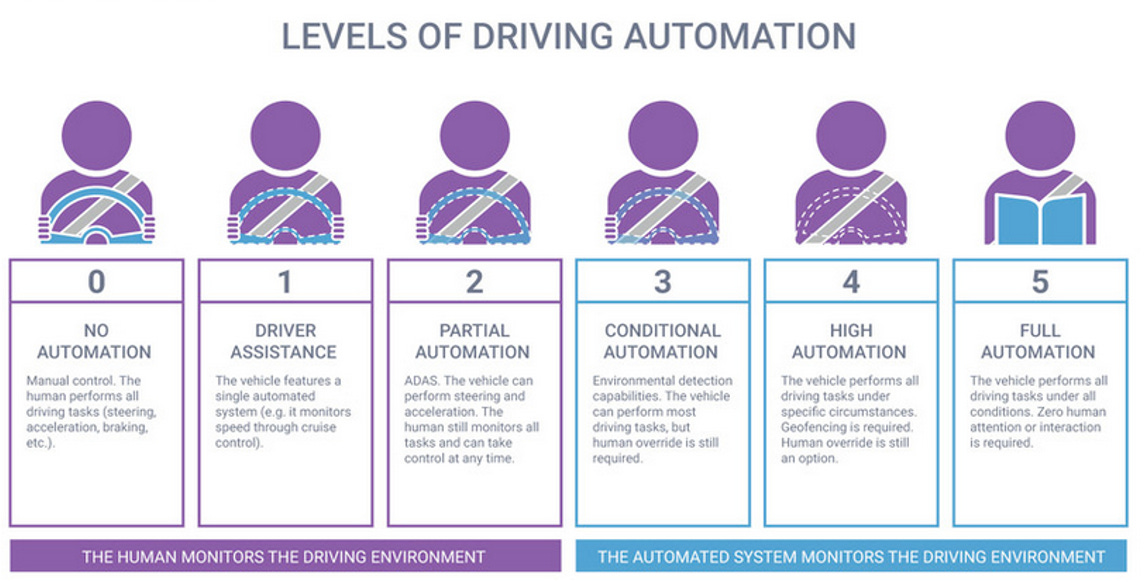

Currently, it is unclear when Tesla and the Lidar approach companies will achieve level 5 autonomy (see below). Both approaches are likely to solve autonomous driving eventually, but the million-dollar question is who will solve it first. Currently, Lidar approach companies such as Waymo have already achieved level 4 autonomy in Phoenix, whereas Tesla is only at level 2 from a legal perspective (although some reviewers have suggested that, tech-wise, the Tesla Full Self Driving (FSD) beta may be level 3 or even 4).

Source: Synopsys

Both approaches face their own challenges. For Lidar approach companies, it is the cost of Lidar and the time required to HD map the whole world. For Tesla, the challenge is that the ‘brain’ of the car needs to be at least as good as a human, or better. However, the benefit of the Tesla approach is its scalability, whereas the Lidar approach is difficult to scale (see below).

Source: Ark Invest

Tesla Neural Network

The most important part of Tesla’s vision approach is that a Tesla needs to have an excellent ‘brain’ that makes the correct driving decision every time. Technically, this ‘brain’ is constructed from many neural networks. A neural network is a series of algorithms that takes multiple inputs and predicts an output (see below for an example of a neural network). Tesla uses many neural networks to predict in real time what is surrounding a Tesla car, and thereby make the correct driving decision. For instance, a Tesla neural network may ask: based on the visuals collected from the cameras, is there any obstacle in front of my Tesla?

Source: Singularity Financial

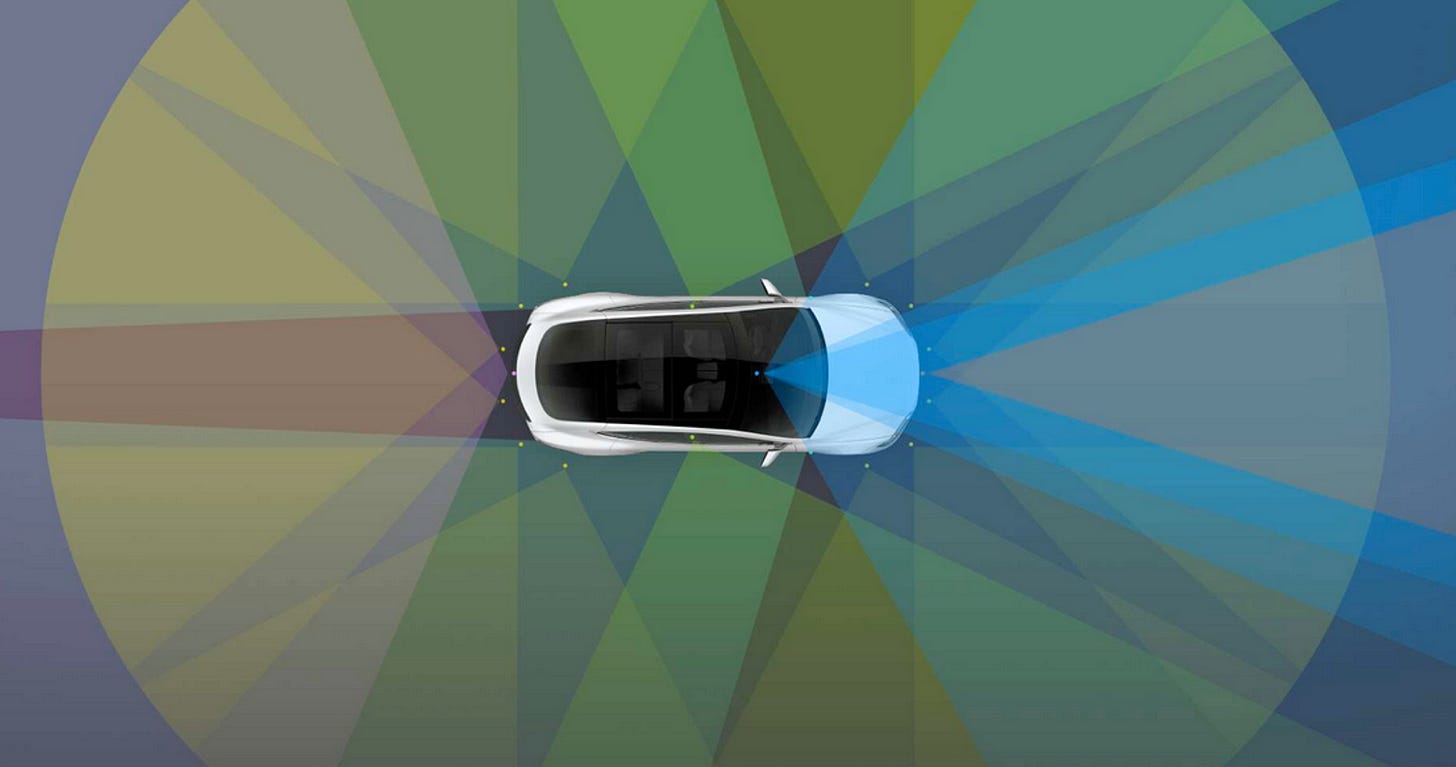

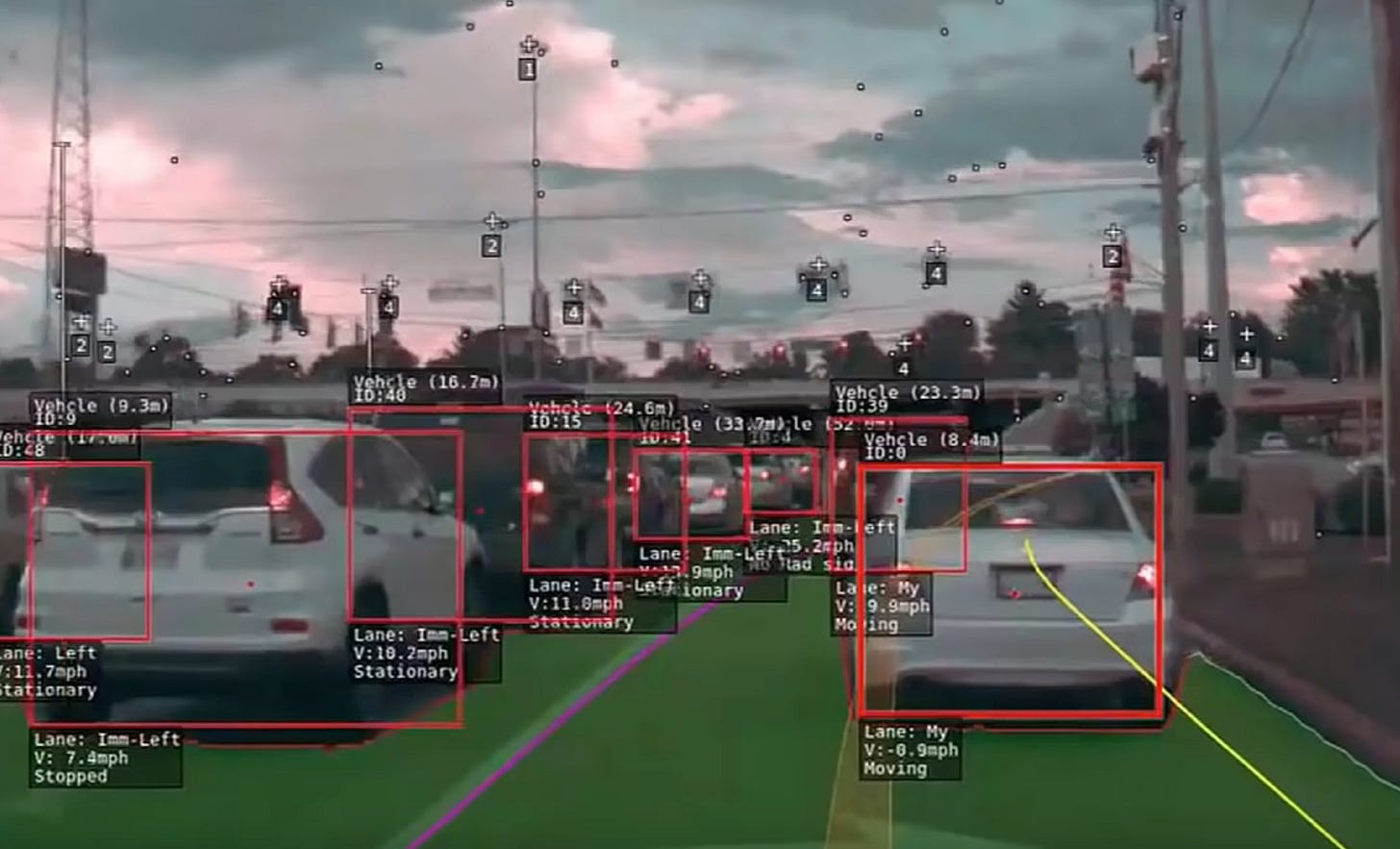

For a Tesla neural network, the inputs are the visuals collected by the cameras, the in-between layers are the prediction process based on calculations, algorithms and code, and the output is a prediction of what the object perceived by the camera is. The ‘brain’ of the Tesla then gathers all the visuals and predictions and reconstructs the surroundings of the car. Afterwards, the brain tries to make the correct driving decision. A real-life example is shown below. This prediction process is what Tesla needs to refine and perfect. Currently, the inputs come from 8 cameras, 1 radar (soon to be removed) and 12 ultrasonic sensors, giving a typical Tesla car a 360-degree view.

Source: Tesla

The goal for Tesla is to bring all its neural networks to the point where the predicted output matches the answer a human would give, every time. For instance, if the input (visuals) is a person walking across the street, the correct predicted output should be a person walking across the street. This is essential because the car’s brain can only make the correct driving decision based on a correct understanding of its surroundings.
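For readers who like to see things in code, here is a minimal sketch of the input, in-between layer and output idea described above. It is a toy network in plain Python, not Tesla’s architecture: the three input numbers, the weights and the ‘obstacle probability’ reading are all made up for illustration.

```python
import math


def sigmoid(x):
    """Squash any number into the range 0..1 so it can be read as a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """One pass through a tiny network: inputs -> hidden layer -> single output."""
    hidden = [
        sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    return sigmoid(sum(w * h for w, h in zip(output_weights, hidden)) + output_bias)


# Hypothetical inputs: three numbers summarizing a camera image.
pixels = [0.8, 0.1, 0.5]
# Hypothetical hand-picked weights; a real network learns these during training.
hidden_weights = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
hidden_biases = [0.0, 0.1]
output_weights = [1.2, -0.7]
output_bias = 0.05

obstacle_probability = forward(
    pixels, hidden_weights, hidden_biases, output_weights, output_bias
)
print(f"Probability of an obstacle ahead: {obstacle_probability:.2f}")
```

A production network has millions of weights and many layers, but the flow is the same: numbers in, weighted sums in the middle, a prediction out.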

To achieve such accuracy, these neural networks must be trained by taking inputs and predicting an output. Once an output is predicted, Tesla can verify whether it is correct. If it is not, Tesla adjusts the in-between layers, aka the prediction process, and teaches the neural network to predict the correct output. At the start, almost all predictions will be wrong, because the neural network is like a baby’s brain without any knowledge. In the beginning, it is hard for a neural network even to tell whether an animal is a dog or a cat. To perfect the prediction process, the network needs to repeat it many times. Eventually, after many tries, the predictions become very accurate.
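The predict, check, adjust loop described above can be sketched in a few lines of Python. This is a minimal illustration using a single-layer model (logistic regression trained by gradient descent), which I have swapped in for a full neural network to keep it short. The two ‘features’ per image and the dog/cat labels are invented toy data.

```python
import math


def predict(weights, bias, features):
    """Return the model's probability that the image shows a dog."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, epochs=2000, learning_rate=0.5):
    """Repeat predict -> compare with label -> nudge weights, many times."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = predict(weights, bias, features) - label
            # Move each weight a little in the direction that reduces the error.
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Toy data: two made-up features per image; label 1 = dog, 0 = cat.
examples = [([0.9, 0.2], 1), ([0.8, 0.3], 1), ([0.2, 0.9], 0), ([0.1, 0.7], 0)]

# Before training the model knows nothing: every prediction is 0.50.
print(f"Untrained guess: {predict([0.0, 0.0], 0.0, examples[0][0]):.2f}")

weights, bias = train(examples)
for features, label in examples:
    print(f"label={label} predicted={predict(weights, bias, features):.2f}")
```

The untrained model is the ‘baby’s brain’: it outputs 0.50 for everything. After a couple of thousand passes over the same four examples, its predictions land close to the correct labels.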

Neural nets usually start relatively slowly but improve exponentially as they learn from more data. The field usually outperforms expectations. An example of how good a neural network can become is Google’s AlphaGo project. AlphaGo is an AI that uses neural nets to play the board game Go. At the start, AlphaGo could not even beat a beginner. However, after several years, it could beat the best Go players in the world. This example demonstrates the power of machine learning and neural nets.

Source: The Median

One way that Tesla trains its FSD neural network is by using probabilities. For example, if the car receives a visual of a blurry stop sign, it will automatically calculate the probability that the blurry sign is a stop sign. Then it will compare that calculated probability to a benchmark. For example, if the calculated probability is over 98%, the vehicle must stop. If the calculated probability is 95%, it will not stop. If such a prediction is incorrect, the human driver will intervene, and Tesla will be notified of the incorrect prediction. Afterwards, Tesla can fix the issue by changing the threshold or the calculation process and teach the neural network to predict the correct outcome.

Source: Tesla
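The stop-sign example can be sketched as a simple threshold check. The 98% benchmark comes from the example in the text; the function names and the ‘flag for review’ idea (recording cases where the driver’s action disagrees with the car’s decision) are my own hypothetical simplification of the feedback loop, not Tesla’s actual code.

```python
STOP_THRESHOLD = 0.98  # benchmark from the example in the text


def should_stop(stop_sign_probability, threshold=STOP_THRESHOLD):
    """The car stops only if the probability is above the benchmark."""
    return stop_sign_probability > threshold


def review_prediction(stop_sign_probability, driver_stopped, threshold=STOP_THRESHOLD):
    """Compare the car's decision with what the human driver actually did.

    A disagreement (for example the driver braking when the car would not
    have stopped) is flagged so the case can be used for retraining.
    """
    car_stops = should_stop(stop_sign_probability, threshold)
    return {"car_stops": car_stops, "flag_for_review": car_stops != driver_stopped}


# A blurry sign scored at 95%: the car would not stop, but the driver brakes.
print(review_prediction(0.95, driver_stopped=True))
# A clear sign scored at 99%: the car stops and the driver agrees.
print(review_prediction(0.99, driver_stopped=True))
```

The first case is exactly the kind of intervention the text describes: the disagreement is what tells Tesla that either the threshold or the calculation behind the 95% needs work.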

Tesla’s Supercomputer - Dojo

Tesla’s FSD neural networks predict what an object is, and many predictions must be made before the neural networks become very accurate. In order to teach a neural network, someone must verify whether its prediction is correct. If the prediction is incorrect, a person must manually label the correct object. For example, if the neural network incorrectly predicted a stop sign to be a slow sign, a manual labeler must fix this by overriding the slow sign label with the correct stop sign label. A real-world example is shown below. Although manual labelers can perform the task very well, they are expensive and not cost efficient, because many frames and many objects need to be labelled. Therefore, Tesla came up with a solution: its own supercomputer, Dojo.

Source: Tesla

So, what is Dojo? In the words of Elon, Dojo is a super powerful neural network training computer. The goal of Dojo is to take in vast amounts of data and conduct massive unsupervised training of the neural networks. In other words, Dojo acts as the ‘human labeler’ and oversees the prediction process. Dojo speeds up the learning of the neural networks significantly. Currently, whenever Tesla collects data or visuals, Dojo pre-labels many things in the frames, and the human labelers only need to fix the errors made by Dojo instead of labelling everything from scratch. Dojo decreases the cost of computation and labelling by an order of magnitude.

Whenever Tesla collects a video or visual from a car, Dojo can transform the frames into a 3D video animation. This gives the neural nets the full sequence and the ability to look forward and backward in time instead of at just one frame. Dojo enables the neural nets to conduct self-supervised training. For example, the neural network can make a prediction in frame 1 (the collected visual) and self-check the prediction in frame 20. This process can be repeated many times over all collected visuals to improve the speed of learning. The other benefit of Dojo is that it allows the neural nets to know that an object is behind other objects. For example, suppose a person is walking along a street from frame 1 to frame 120, and in frame 121 the person is fully behind a car parked beside the road. Without Dojo, just by looking at frame 121, the neural nets will not know that there is a person behind the car. With Dojo, however, the neural nets can tell that in frame 120 the person was right beside the car, so in frame 121 the person must be behind the car, because he cannot just disappear within one frame.
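The ‘person behind the parked car’ example boils down to remembering objects across frames. Here is a minimal sketch of that idea. It assumes each frame has already been reduced to a set of object names (a big simplification), and the 30-frame memory limit is a number I picked for illustration; none of this is Tesla’s implementation.

```python
def track_objects(frames, max_hidden_frames=30):
    """Label each known object as 'visible' or 'occluded' in every frame.

    frames: list of sets, each holding the names of objects detected in
            that frame. An object that was seen recently but is missing
            now is assumed to be hidden, not gone.
    """
    last_seen = {}
    history = []
    for index, detections in enumerate(frames):
        for name in detections:
            last_seen[name] = index
        state = {}
        for name, seen_at in last_seen.items():
            hidden_for = index - seen_at
            if hidden_for == 0:
                state[name] = "visible"
            elif hidden_for <= max_hidden_frames:
                state[name] = "occluded"
        history.append(state)
    return history


# Frames 1-120: person and parked car both visible. Frame 121: only the car.
frames = [{"person", "parked_car"} for _ in range(120)] + [{"parked_car"}]
history = track_objects(frames)

print("Single-frame view of frame 121:", sorted(frames[120]))
print("Sequence-aware view of frame 121:", history[120])
```

Looking at frame 121 alone, the person is simply absent. With the sequence, the tracker still reports the person, marked as occluded, which is the behaviour the text attributes to training on full video rather than single frames.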

Potentially Licensing Dojo Out?

In the future, if Tesla wants, it can license Dojo out as a machine learning solution and allow others to use this powerful self-supervised neural network training tool to solve other hardcore AI problems that require a lot of trial and error. As AI becomes a larger part of our lives, this service has the potential to become an essential tool for AI development.

The Data Advantage

The more data you feed into an AI (the neural nets), the better the AI gets. Tesla has by far the most real-world data to train its neural nets compared with its competitors. Tesla currently has around 1 to 2 billion miles of real-world data, compared with 6.1 million miles from Waymo, its closest competitor in autonomous driving. That is a massive difference of roughly 160x to 330x.

Source: Lex Fridman

Currently, since the neural nets are much more mature than before, most objects can be labelled and identified successfully with Dojo almost every time. Most problems arise in specific or one-off situations that the neural network has not yet encountered. When such a problem arises, Tesla gathers the relevant data from the Tesla fleet. For example, if the neural nets failed to label a ‘stop except right turn’ sign correctly, Tesla will try to find all the data it has on ‘stop except right turn’ signs, as illustrated below. Tesla will then feed this data into the neural nets as inputs to improve their ability to detect this specific sign the next time a Tesla encounters it.

Source: Tesla

Tesla’s competitors do not have as many miles driven with cameras to form a comprehensive database. This makes it harder for them to solve this kind of specific problem. This may be another reason why competitors use the Lidar approach rather than the vision approach: fundamentally, none of them has that much data to train a neural net and make it accurate.

The Bird’s Eye View

Tesla started with a field-of-view, geometric approach when first developing its FSD. It later switched to the bird’s eye view, which is a big improvement. Below is an illustration of the bird’s eye view compared with the old geometric approach based on field of view. The bird’s eye view is a unified top-down view of the car’s surroundings in one frame, constructed by combining all the cameras’ views. It asks the car: what do the current surroundings look like if we look at this car and its surroundings from the top down? The bird’s eye view forces all the cameras to reconcile with each other and provide a holistic understanding of the car’s surroundings instead of multiple separate camera views.

Camera view on the right, which is not very useful compared with the bird’s eye view on the left

Source: Tesla
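To give a feel for what ‘combining all the cameras’ views into one top-down frame’ means geometrically, here is a small sketch. It assumes each camera already reports a detection as a bearing and a distance, that all cameras sit at the center of the car, and that two same-label detections within one meter of each other are the same object. All of these are simplifying assumptions of mine; Tesla does this with neural networks, not hand-written trigonometry.

```python
import math


def to_car_frame(camera_yaw_deg, bearing_deg, range_m):
    """Convert one camera detection into top-down car coordinates.

    camera_yaw_deg: direction the camera points (0 = forward, 90 = left).
    bearing_deg:    angle of the object relative to the camera's axis.
    Returns (x, y) in meters with x pointing forward and y pointing left.
    """
    angle = math.radians(camera_yaw_deg + bearing_deg)
    return (range_m * math.cos(angle), range_m * math.sin(angle))


def birds_eye_view(detections, merge_radius_m=1.0):
    """Merge detections from all cameras into one top-down list of objects."""
    merged = []
    for camera_yaw_deg, bearing_deg, range_m, label in detections:
        x, y = to_car_frame(camera_yaw_deg, bearing_deg, range_m)
        for obj in merged:
            same_place = math.hypot(obj["x"] - x, obj["y"] - y) < merge_radius_m
            if obj["label"] == label and same_place:
                break  # another camera already reported this object
        else:
            merged.append({"label": label, "x": x, "y": y})
    return merged


detections = [
    (0, 45, 10.0, "car"),      # front camera sees a car ahead and to the left
    (90, -45, 10.0, "car"),    # left camera sees the same car
    (180, 0, 5.0, "person"),   # rear camera sees a person directly behind
]
for obj in birds_eye_view(detections):
    print(f"{obj['label']}: x={obj['x']:.1f} m, y={obj['y']:.1f} m")
```

Two cameras report the car, but the top-down view contains it only once. That reconciliation step is the point of the bird’s eye view: one consistent picture instead of several overlapping ones.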

How does the bird’s eye view work?

Source: Dave Lee on Investing and James Douma

The bird’s eye view utilizes temporal integration, so the cameras are looking across time in addition to static frames. This means that there is continuity between what the camera saw a second ago and what it sees now. This allows the neural nets to perform consistency checks. Furthermore, the bird’s eye view can tell the neural nets how fast the surrounding objects are moving, because there is a time element instead of only static frames.
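Here is a minimal sketch of why the time element matters. Given an object’s top-down position in two frames, you can estimate its velocity, and you can reject detections that would require an impossible jump. The coordinates, the time step and the 70 m/s plausibility limit are my own assumptions for illustration.

```python
import math


def estimate_velocity(position_then, position_now, seconds_between):
    """Velocity (vx, vy) in m/s from two top-down positions in meters."""
    vx = (position_now[0] - position_then[0]) / seconds_between
    vy = (position_now[1] - position_then[1]) / seconds_between
    return (vx, vy)


def is_consistent(position_then, position_now, seconds_between, max_speed_mps=70.0):
    """Consistency check: an object cannot move faster than a plausible limit."""
    vx, vy = estimate_velocity(position_then, position_now, seconds_between)
    return math.hypot(vx, vy) <= max_speed_mps


# A pedestrian 10 m ahead moves 2 m to the left over one second.
print(estimate_velocity((10.0, 0.0), (10.0, 2.0), seconds_between=1.0))

# A detection that 'jumps' 100 m in a tenth of a second is not believable.
print(is_consistent((10.0, 0.0), (110.0, 0.0), seconds_between=0.1))
```

A single static frame can give neither of these answers, which is why stitching frames together over time is such a useful addition.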

The bird’s eye view provides a ‘map’ of the surroundings, making it easier for the programmers to write code to control the car than with a field of view. The bird’s eye view also allows the neural network to understand that the world is three dimensional, because a top-down view is only possible if the world is 3D. This was initially very hard for the neural nets to learn, because pictures and videos are all 2D from the camera’s point of view.

Example of the bird’s eye view, with different colors representing different things

Source: Matroid

The Pseudo Lidar – Tesla’s Solution to Not Using Lidar

As mentioned earlier, Lidar helps with depth measurement between objects, and Tesla has decided not to use it. Tesla’s solution for achieving comparable or better depth measurement is the ‘Pseudo Lidar’. In the words of James Douma, Pseudo Lidar shows a picture to the neural network and asks it to tell what a Lidar would see in that picture. With the Pseudo Lidar, Tesla tries to teach the neural network to look at a picture and accurately guess how far an object is from the car. Because Tesla has Dojo, this process can be self-supervised and cost effective. For example, if the neural network guesses that a car in front of a Tesla is 25m away in frame 1, Dojo can use future frames to validate whether this prediction was correct. The Pseudo Lidar also makes the bird’s eye view and the whole visualization package more comprehensive.
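One way to picture ‘using future frames to validate a prediction’ is the sketch below. It assumes the object ahead is stationary, that the Tesla’s own speed is known, and that a later, closer measurement is more trustworthy than the early guess. The 30 frames per second and the 1 m tolerance are numbers I made up; how Tesla actually does this check is not something I know in detail.

```python
def expected_distance(initial_guess_m, ego_speed_mps, frames_elapsed, fps=30.0):
    """Where a stationary object should be after the car has driven toward it."""
    return initial_guess_m - ego_speed_mps * frames_elapsed / fps


def check_prediction(initial_guess_m, later_measurement_m, ego_speed_mps,
                     frames_elapsed, fps=30.0, tolerance_m=1.0):
    """Compare the early guess against a later measurement.

    Returns (is_consistent, error_m). The error can serve as a training
    signal without any human having to label the frame.
    """
    expected = expected_distance(initial_guess_m, ego_speed_mps, frames_elapsed, fps)
    error_m = later_measurement_m - expected
    return (abs(error_m) <= tolerance_m, error_m)


# Frame 1: the network guesses 25 m. The car drives at 10 m/s for 30 frames (1 s),
# so a stationary object should now be about 15 m away.
print(check_prediction(25.0, 15.4, ego_speed_mps=10.0, frames_elapsed=30))
print(check_prediction(25.0, 18.0, ego_speed_mps=10.0, frames_elapsed=30))
```

In the first case the early guess holds up. In the second, the object is about 3 m further away than the guess implied, so the original 25 m estimate was too short and the network can be corrected.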

The Pipeline of Pseudo Lidar

Source: ‘Pseudo-Lidar from Visual Depth Estimation: Bridging the Gap in 3D Object Detection for Autonomous Driving’

Top: camera view, middle: depth prediction and bottom: bird’s eye view

Source: Tesla

Tesla believes that with adequate training, the ‘Pseudo Lidar’ neural network can understand the depth between objects really well, thus eliminating the need for Lidar. A research paper named ‘Pseudo-Lidar from Visual Depth Estimation: Bridging the Gap in 3D Object Detection for Autonomous Driving’ claims that the gap between Pseudo Lidar and Lidar is closing and that, if executed well, Pseudo Lidar can substitute for Lidar. However, when this will happen is still uncertain. In a way, Tesla is betting that the gap between the Pseudo Lidar and a real Lidar will close very soon, and its competitors are betting that it will not.
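The core step in that paper is turning a predicted depth image into a Lidar-like point cloud by back-projecting every pixel through a pinhole camera model. Here is a minimal sketch of that step. The camera parameters (focal lengths fx, fy and image center cx, cy) and the tiny 2x2 depth map are hypothetical values for illustration only.

```python
def pixel_to_point(u, v, depth_m, fx, fy, cx, cy):
    """Back-project one pixel with known depth into 3D camera coordinates.

    u, v:    pixel column and row.
    depth_m: predicted distance along the camera's forward axis.
    Returns (x, y, z) in meters: x to the right, y down, z forward.
    """
    z = depth_m
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return (x, y, z)


def depth_map_to_point_cloud(depth_map, fx, fy, cx, cy):
    """Convert a whole depth image into a list of 3D points.

    Pixels with depth 0 are treated as 'no estimate' and skipped.
    """
    points = []
    for v, row in enumerate(depth_map):
        for u, depth_m in enumerate(row):
            if depth_m > 0:
                points.append(pixel_to_point(u, v, depth_m, fx, fy, cx, cy))
    return points


# A pixel at the image center, 20 m away, lies straight ahead of the camera.
print(pixel_to_point(640, 360, 20.0, fx=1000.0, fy=1000.0, cx=640.0, cy=360.0))

# A toy 2x2 depth map: two pixels have depth estimates, two do not.
toy_depth_map = [[0.0, 5.0], [2.0, 0.0]]
print(depth_map_to_point_cloud(toy_depth_map, fx=1.0, fy=1.0, cx=0.5, cy=0.5))
```

Once the image has become a cloud of 3D points, dropping the height coordinate gives a top-down layout, which is how the depth prediction feeds the bird’s eye view. The hard part, of course, is predicting the depth accurately in the first place.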

More Examples of the Pseudo Lidar in Action

Source: Tesla

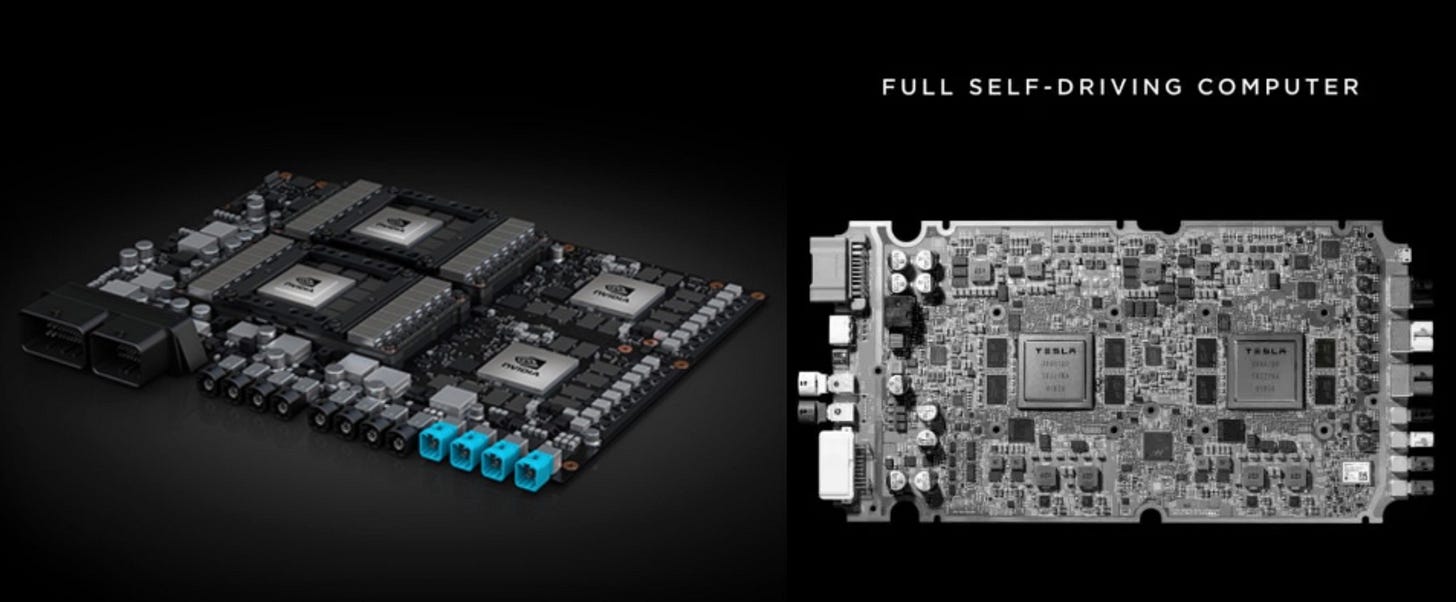

Tesla FSD Chip vs Competitors’ Chip (Mainly Nvidia’s Chip)

Tesla not only has a software advantage (neural nets, Dojo, and the bird’s eye view) but also a hardware advantage. Tesla has a better chip in comparison with the Nvidia Drive AGX chip that most of its competitors use.

The Nvidia Chip and the Tesla FSD Chip

Source: Electrek

Tesla’s chip is better than Nvidia’s chip in several respects. Firstly, Tesla’s own chip is likely to cost it less than competitors pay for an Nvidia chip. This is because Tesla designed its own chip, whereas other companies need to pay Nvidia an IP premium. Secondly, the Tesla chip is about half the size of the Nvidia chip and draws less power: below 100 watts, compared with 500+ watts for the Nvidia chip. Thirdly, the Tesla chip is specifically designed for Tesla’s vision approach, and everything in the chip is customized the way Tesla wants. Nvidia’s chip, in comparison, is a more general-purpose design, which can do some other things too but offers no customization.

Tesla FSD Chip

Source: Tesla

The Tesla chip has 64 MB of memory on the chip, whereas the Nvidia chip does not. This on-chip memory allows the Tesla chip to work continuously at high speed while also decreasing power consumption. Although the Nvidia chip has better specs, the real question should be how much performance is needed to solve autonomous driving, rather than the general idea that the more powerful a chip is, the better. The more powerful a chip is, the more power it consumes, which has a negative impact on mileage. Tesla believes that the FSD chip’s specification can get the job done and that the extra specs Nvidia offers are unnecessary. Furthermore, the specification of a chip is not the sole determinant of performance. A notable example is the Apple Silicon M1 chip. On paper, the M1 may not be better than other chips; however, with vertical integration and a design specific to Apple devices, the new Apple M1 laptops perform incredibly well with long-lasting battery life. This is exactly what Tesla is trying to achieve by designing both the hardware and software in house to optimize performance and minimize power consumption.
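To put a rough number on ‘more power means less mileage’, here is a back-of-the-envelope sketch. It takes the power figures mentioned earlier (under 100 watts versus 500+ watts) and converts them into energy used per mile and lost driving range. The 25 mph average speed (think city robotaxi) and the 250 Wh per mile driving consumption are assumptions of mine, not Tesla or Nvidia figures.

```python
def compute_energy_wh_per_mile(compute_power_w, average_speed_mph):
    """Energy the computer uses for each mile driven.

    One mile takes (1 / speed) hours, so energy = power * time = power / speed.
    """
    return compute_power_w / average_speed_mph


def range_loss_percent(compute_power_w, average_speed_mph, driving_wh_per_mile):
    """Share of the car's range given up to power the computer."""
    extra = compute_energy_wh_per_mile(compute_power_w, average_speed_mph)
    return 100.0 * extra / (driving_wh_per_mile + extra)


AVERAGE_SPEED_MPH = 25.0       # assumed city driving speed
DRIVING_WH_PER_MILE = 250.0    # assumed energy to move the car one mile

for name, power_w in [("100 W chip", 100.0), ("500 W chip", 500.0)]:
    energy = compute_energy_wh_per_mile(power_w, AVERAGE_SPEED_MPH)
    loss = range_loss_percent(power_w, AVERAGE_SPEED_MPH, DRIVING_WH_PER_MILE)
    print(f"{name}: {energy:.0f} Wh per mile, about {loss:.1f}% of range")
```

Under these assumptions the 100 W computer costs about 4 Wh per mile and the 500 W one about 20 Wh per mile. The slower the car drives, the bigger the gap gets, because the computer keeps drawing power for every minute on the road.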

Although the Tesla FSD chip is better, it must be noted that, from a technical standpoint, there is nothing stopping Nvidia from making such a chip too. However, Nvidia may not have the financial incentive to make a chip like the Tesla FSD chip, because it is tailored to Tesla’s needs. It may be suitable for one car maker but not for all the others, which means that demand will be capped. Furthermore, millions of dollars must be invested in development to produce a new chip. Nevertheless, if a car maker promises Nvidia that it is going to buy tons of that chip, then it may make financial sense for Nvidia to produce a chip like Tesla’s. However, it seems unlikely that any legacy automaker is going to do so, and EV startups do not have enough scale for now.

Competitors in Autonomous Driving

Currently, the biggest competitor in the US for Tesla’s FSD is Waymo. Waymo uses the Lidar approach and has already driven over 10 million miles autonomously. Waymo currently offers level 4 autonomy, meaning that its vehicles can perform all driving tasks under specific circumstances (geofencing is required). Waymo is the first company in the US to offer an autonomous taxi network, which contains around 600 vehicles and operates in Phoenix (a geofenced area).

Source: Bloomberg

You may ask by now: if Waymo is already offering a ‘driverless taxi’ service in Phoenix, isn’t Tesla way behind Waymo? The key to this question again lies in the difference between the Lidar approach and the camera-based approach. Below is a graph which details the solution speed and scalability of the two approaches. The Lidar approach is easy to start with but very hard to scale (meaning that reaching level 5 autonomy will be very difficult). In contrast, the camera approach is very hard to start with but easy to scale. As mentioned earlier, the scalability limitation of the Lidar approach is due to the cost and time needed for HD mapping and the additional cost of an expensive Lidar. This is why Waymo only provides its service in Phoenix with 600 vehicles: to expand the service, Waymo needs significant money and time to extend its HD maps to different cities and to buy additional autonomous vehicles.

Source: Ark Invest

Waymo’s story and scalability limitation are what all other autonomous driving players experience, because everyone except Tesla uses the Lidar approach. This implies that all other competitors will face the cost and time required for HD mapping and Lidar installation. Therefore, more players may come up with geofenced ‘driverless taxi’ services similar to Waymo’s current Phoenix service and operate at a relatively small scale.

On the flip side, Tesla’s camera-based approach means that once Tesla has perfected the neural nets of the FSD system (aka the brain of the car), a Tesla can instantly be transformed into an autonomous car via an over-the-air software update. This can be done in any part of the world, as no HD maps are required. Tesla has chosen a much harder camera-based approach to begin with, but if it is perfected, the resulting gains will be significantly higher too.

Therefore, the golden question is: can Waymo and other competitors HD map the world while Lidar costs also decrease significantly in the near term? Or can Tesla perfect its neural nets and AI decision making first? Personally, I believe that Tesla will solve level 5 autonomy first. There are two main reasons. Firstly, Tesla’s new FSD beta is already on par with most competitors’ autonomous driving solutions, if not better (maybe except Waymo and Baidu). Tesla’s approach has a harder start but an easier finish, and it is already on par with the others’ approach, which has an easier start but a harder finish. This means that Tesla is in fact ahead of the competition. Secondly, Waymo’s Phoenix service was started in 2019, yet Waymo has still not expanded the service to any other location. This illustrates the huge scalability limitation of the Lidar approach.

I would like to end this autonomous driving discussion with my not-so-great graph below. The graph shows how I believe the autonomous driving space is going to play out. Waymo reached level 4 autonomy a while ago. However, it will take a long time before Waymo reaches level 5 autonomy (no geofencing). In contrast, Tesla has yet to reach level 4 autonomy, but Tesla’s FSD beta is improving exponentially. This is because Tesla’s camera-based approach is based on AI, and like any AI project, the start is always the most difficult; once a certain inflection point is reached, Tesla’s FSD should improve exponentially. Therefore, I believe that Tesla is likely to reach level 5 autonomy several years (or even more) ahead of the competition.

This ends Part 2, the discussion on Tesla FSD and autonomous driving. To learn more about autonomous driving development, I would like to encourage you to go onto YouTube and watch some videos about Tesla FSD beta and Waymo’s autonomous driving. Please have a look and determine the difference yourself!

Please refer to Part 3 for Tesla Energy. I hope to see you there!